Episode #661

October 2, 2021

In the beginning of computer graphics there were dots.

A computer screen lit a pixel and it was white.

If a pixel was not lit, it was black.

Thus, a pattern of black and white dots represented every graphic element.

Letters in words in sentences were made of black and white dots.

Any graphic was made of dots.

By rearranging the dots over time, the screen could be animated.

Since we lived in the age of paper, computer graphics had to be transferred to paper.

The paper was already white, so the printer only printed the black dots.

We called this pattern a bitmap, and the printers that reproduced it were dot matrix printers.
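As a small illustration of the idea, here is a sketch of a letter stored as a grid of dots and "printed" by marking only the black ones. The 5 by 7 pattern for the letter A and the names `LETTER_A`, `render` and `count_dots` are made up for this example; they do not come from any real printer or font.

```python
# Hypothetical 5x7 bitmap for the letter "A": "1" is a black dot, "0" is blank paper.
LETTER_A = [
    "01110",
    "10001",
    "10001",
    "11111",
    "10001",
    "10001",
    "10001",
]


def render(bitmap, ink="#", blank=" "):
    """Return the bitmap as text, placing ink only where a bit is 1."""
    return "\n".join(
        "".join(ink if bit == "1" else blank for bit in row)
        for row in bitmap
    )


def count_dots(bitmap):
    """Count the black dots the printer actually has to print."""
    return sum(row.count("1") for row in bitmap)


print(render(LETTER_A))
print(count_dots(LETTER_A), "of", 5 * 7, "positions get ink")
```

The paper supplies the white for free, so only about half of the grid positions in this letter need any work from the printer.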

Black and white was fine while it lasted, but our lives are in color.

The Apple II provided a connector to support a color CRT (Cathode Ray Tube) monitor.

The Apple II was not powerful enough to actually drive the color monitor, so color had to wait for more advanced programming and greater computing power.

Instead of telling a pixel to light up or not, a color program had to send instructions to three sub-pixels (red, green and blue) to generate a color.

One black or white pixel = one bit (a one or a zero).

RGB (Red, Green, Blue) instructions require one byte each.

One color pixel = three bytes (24 bits).

The higher the resolution of the monitor, the greater the number of bytes required to paint the screen.

Our modern 4K monitors (3840 × 2160 pixels) require about 199 million bits per screen at 30 screens per second.

That is about 6 billion bits per second (roughly 750 megabytes per second), which takes a lot of computing power.
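The screen arithmetic can be checked with a short sketch. It assumes a 4K UHD panel of 3840 by 2160 pixels, 24 bits per color pixel, 30 screens per second, and no compression. The function names are invented for this example.

```python
def frame_bits(width, height, bits_per_pixel):
    """Bits needed to describe every pixel on one screen."""
    return width * height * bits_per_pixel


def bits_per_second(width, height, bits_per_pixel, fps):
    """Bits needed each second to repaint the screen fps times."""
    return frame_bits(width, height, bits_per_pixel) * fps


# Assumed 4K UHD resolution; other "4K" panels differ slightly.
WIDTH, HEIGHT = 3840, 2160

mono = frame_bits(WIDTH, HEIGHT, 1)           # one bit per black or white pixel
color = frame_bits(WIDTH, HEIGHT, 24)         # three bytes per RGB pixel
rate = bits_per_second(WIDTH, HEIGHT, 24, 30)

print(f"black and white screen: {mono:,} bits")
print(f"color screen:           {color:,} bits")
print(f"30 screens per second:  {rate:,} bits ({rate // 8:,} bytes)")
```

Doubling the width and height of the screen quadruples every one of these numbers, which is why resolution drives the cost so quickly.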

Color printer file sizes can be very large because instructions for C (Cyan), M (Magenta), Y (Yellow) and K (Black) are required.

To process print files more efficiently, PostScript was invented.

Instead of describing every dot in a line, PostScript describes the starting point and the ending point, and a mathematical formula draws all the dots in between.

This reduces the file size and processing time dramatically.
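To make the saving concrete, here is a sketch in Python (not PostScript) that turns two endpoints into the full list of dots. It uses Bresenham's line algorithm as a stand-in; this is an assumption for illustration, not a description of how any particular PostScript interpreter fills in the dots.

```python
def line_dots(x0, y0, x1, y1):
    """Bresenham's line algorithm: list every dot between two endpoints."""
    dots = []
    dx = abs(x1 - x0)
    dy = -abs(y1 - y0)
    step_x = 1 if x0 < x1 else -1
    step_y = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        dots.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        doubled = 2 * err
        if doubled >= dy:   # time to step horizontally
            err += dy
            x0 += step_x
        if doubled <= dx:   # time to step vertically
            err += dx
            y0 += step_y
    return dots


# The "file" only has to store the two endpoints: four numbers.
endpoints = (0, 0, 999, 0)
dots = line_dots(*endpoints)
print(len(endpoints), "numbers describe", len(dots), "dots")
```

Four numbers stand in for a thousand dots here, and the ratio only improves as the line gets longer.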

In some file formats, patterns of dots can be compressed into mathematical formulae which are smaller and more efficient than describing each of the pixels.
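One of the simplest schemes of this kind is run-length encoding, which replaces a run of identical dots with a count. The sketch below is only an example of the general idea; the text above does not name a specific file format, and the function names are invented here.

```python
def rle_encode(row):
    """Collapse a row of dots into (dot, run length) pairs."""
    runs = []
    for bit in row:
        if runs and runs[-1][0] == bit:
            runs[-1][1] += 1        # same dot as before: extend the run
        else:
            runs.append([bit, 1])   # different dot: start a new run
    return [(bit, count) for bit, count in runs]


def rle_decode(runs):
    """Rebuild the original row of dots from the runs."""
    return "".join(bit * count for bit, count in runs)


row = "0" * 40 + "1" * 20 + "0" * 40   # a mostly white row with one black stripe
runs = rle_encode(row)
print(len(row), "dots stored as", len(runs), "runs:", runs)
assert rle_decode(runs) == row          # nothing was lost
```

This works well on rows with long stretches of one color and poorly on rows where the dots alternate, which is why real formats use more elaborate methods.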

Our eyes collect visual data in a dot matrix.

A large 4K monitor (around 43 inches) has a pixel density of about 100 ppi (pixels per inch); smaller 4K screens are denser.

Our eyes have a pixel density of about 300 ppi.

Considering that our vision is continuous (not limited to 30 frames per second), this is a tremendous amount of data to process.

So our brains process what we see conceptually, like PostScript.

If we had to wait to “see” all the pixels in our view, the latency (delay) would be too great.

Our instantaneous perception is essential to protect us from harm.

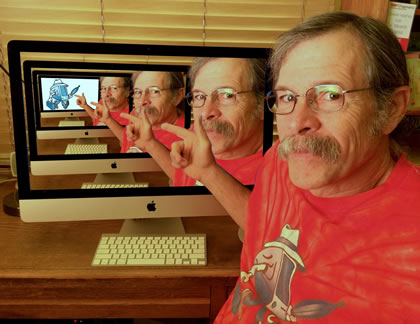

We have subroutines, like PostScript formulae, in our visual processing.

There are three types of processing that join together to create the image in our mind's eye.

- We store shapes which can be drawn from past experience and compared to what we are seeing.

- Colors are compared to colors we have seen before.

- Movement and location are processed like tweening.

Tweening (short for "inbetweening") is a process used in animation where a starting graphic and an ending graphic are drawn. The computer then adds all the images in between. Just like PostScript.
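A minimal sketch of tweening, assuming the simplest case: a single dot moving in a straight line at constant speed (linear interpolation). Real animation software tweens shapes, colors and easing curves as well; the `tween` function here is hypothetical.

```python
def tween(start, end, steps):
    """Return steps + 1 positions from start to end, evenly spaced."""
    (x0, y0), (x1, y1) = start, end
    frames = []
    for i in range(steps + 1):
        t = i / steps  # 0.0 at the first frame, 1.0 at the last
        frames.append((x0 + (x1 - x0) * t, y0 + (y1 - y0) * t))
    return frames


# The animator draws only the first and last positions.
for frame in tween((0, 0), (10, 20), 4):
    print(frame)
```

The animator supplies two positions and a frame count, and the three frames in between are computed rather than drawn.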

Yuo cna porbalby raed tihs esaliy desptie teh msispeillgns.

Using a combination of perception, experience and prediction the brain translates the jumbled words into a sentence.

Why decipher each letter when you can process a sentence conceptually?

To save time and processing power our brains make up most of what we perceive based on past experience.

Why see everything for the first time when you already know the formula for most of what you see?

Brings back good memories. I was one of the first people to write in Postscript code. The guys at Adobe were very impressed.

Vic

Rick – enjoyed this very much. Computers are an infinitesimally small analog when compared to the human brain, which is an infinitesimally small analog to the human spirit or soul. Thanks for your insights.